Introduction

Welcome, aspiring Python pros and machine learning enthusiasts, to an exciting journey through the world of regression algorithms. In this comprehensive blog post, we’ll unravel the intricacies of two essential techniques: Linear Regression and Logistic Regression, and explore how they are implemented in Python 3.

Whether you’re on a quest to understand the foundations of machine learning or aspire to master Python’s capabilities, you’ve come to the right place. By the end of this guide, you’ll not only comprehend the theory behind these regression models but also have complete Python code examples at your disposal.

So, let’s dive deep into the world of regression and bring these concepts to life.

Understanding Regression: A Brief Overview

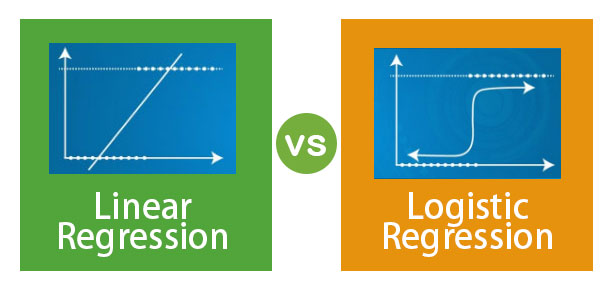

Regression analysis is a core part of supervised machine learning: a model learns the relationship between independent variables (features) and a dependent variable (target) from labeled examples. Linear Regression predicts continuous outcomes, while Logistic Regression, despite its name, predicts the probability of discrete classes and is therefore used for classification. Let’s explore the differences and use cases of these two regression types.

Linear Regression: Predicting Continuous Values

Linear Regression is the go-to technique when you need to predict a continuous numerical value. It establishes a linear relationship between the input features and the target variable. For example, predicting house prices based on square footage, number of bedrooms, and other factors is a classic use case of linear regression.

Logistic Regression: Classifying Categorical Outcomes

Logistic Regression, on the other hand, is employed when the goal is binary or multi-class classification. It predicts the probability that an input belongs to a particular class. It’s widely used in applications like spam detection (spam or not spam), medical diagnosis (disease or no disease), and sentiment analysis (positive or negative sentiment).

Setting Up Your Python Environment

Before we dive into the code, let’s ensure you have the necessary tools and libraries in place. Setting up your Python environment is a breeze.

Step 1: Installing Python 3

If you haven’t already, visit the official Python website (https://www.python.org/downloads/) and download the latest Python 3 version compatible with your operating system.

Step 2: Installing Python Libraries

Python’s power in machine learning comes from its libraries. We’ll be using NumPy, pandas, scikit-learn, and Matplotlib. Run the following command in your terminal or command prompt to install them:

pip install numpy pandas scikit-learn matplotlib

With your environment set up, we’re all set to explore Linear and Logistic Regression through Python.
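Before moving on, it can help to confirm that the install actually worked. The snippet below is a minimal sketch that uses only the standard library (`importlib.metadata`, available from Python 3.8) to look up the installed version of each package from the pip command. The helper name `installed_versions` is made up for this illustration.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names exactly as they appear in the pip command.
PACKAGES = ["numpy", "pandas", "scikit-learn", "matplotlib"]


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


versions = installed_versions(PACKAGES)
for name, ver in versions.items():
    print(f"{name}: {ver if ver is not None else 'NOT INSTALLED'}")
```

If any line reports NOT INSTALLED, rerun the pip command in the same environment that your Python interpreter uses.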

Linear Regression: Predicting Continuous Values

Theory Behind Linear Regression

Linear Regression aims to find a linear relationship between input features and a continuous target variable. The equation for simple linear regression is:

y = β0 + β1 * x

Where:

y is the predicted value.

β0 is the intercept.

β1 is the coefficient of the feature x.
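To make the intercept and coefficient concrete, here is a small sketch that estimates them with the standard least-squares formulas for a single feature, using plain Python and no libraries. The function name `fit_simple_linear` and the data points are invented for illustration; this is not how scikit-learn computes the fit internally.

```python
def fit_simple_linear(xs, ys):
    """Least-squares estimates (beta0, beta1) for y = beta0 + beta1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: covariance of x and y divided by the variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    beta1 = sxy / sxx
    # Intercept: the fitted line passes through the point of means.
    beta0 = mean_y - beta1 * mean_x
    return beta0, beta1


# Made-up points that lie exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

beta0, beta1 = fit_simple_linear(xs, ys)
print(f"intercept beta0 = {beta0:.2f}, slope beta1 = {beta1:.2f}")
print(f"prediction for x = 5: {beta0 + beta1 * 5:.2f}")
```

Because the sample points sit exactly on a line, the fit recovers an intercept of 1 and a slope of 2. With noisy real data the estimates would only approximate the underlying relationship.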

For multiple linear regression, which uses several features at once, the equation extends to:

y = β0 + β1 * x1 + β2 * x2 + ... + βn * xn

Implementing Linear Regression in Python

Let’s dive into Python code to implement Linear Regression. We’ll predict house prices based on features like square footage and number of bedrooms.

import numpy as np

import pandas as pd

from sklearn.linear_model import LinearRegression

import matplotlib.pyplot as plt

# Load the dataset (replace 'data.csv' with your dataset)

data = pd.read_csv('data.csv')

# Define features (X) and target variable (y)

X = data[['SquareFootage', 'NumBedrooms']]

y = data['Price']

# Create and train the linear regression model

model = LinearRegression()

model.fit(X, y)

# Make predictions (here on the same rows the model was trained on)

predictions = model.predict(X)

# Visualize the results (optional)

plt.scatter(data['SquareFootage'], y, label='Actual Prices', alpha=0.6)

plt.scatter(data['SquareFootage'], predictions, color='red', label='Predicted Prices', alpha=0.6)

plt.xlabel('Square Footage')

plt.ylabel('Price')

plt.legend()

plt.show()

This code uses scikit-learn to create a Linear Regression model, fit it to the data, make predictions, and visualize the results.
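If you do not have a CSV file at hand, the same workflow can be tried on generated data. The sketch below is an assumption-laden variant of the example: the housing numbers are synthetic, the "true" coefficients are picked arbitrarily, and a train/test split plus an R² score are added so the model is judged on rows it has not seen.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Synthetic housing data (made up for illustration, not a real dataset).
rng = np.random.default_rng(42)
n_samples = 200
square_footage = rng.uniform(500, 3500, n_samples)
num_bedrooms = rng.integers(1, 6, n_samples)  # whole numbers from 1 to 5
noise = rng.normal(0, 10_000, n_samples)
# Assumed "true" relationship: beta0 = 50,000, beta1 = 150, beta2 = 10,000.
price = 50_000 + 150 * square_footage + 10_000 * num_bedrooms + noise

X = np.column_stack([square_footage, num_bedrooms])
X_train, X_test, y_train, y_test = train_test_split(
    X, price, test_size=0.2, random_state=42
)

model = LinearRegression()
model.fit(X_train, y_train)

# Score on rows the model did not see during fitting.
r2 = r2_score(y_test, model.predict(X_test))

print(f"Intercept (beta0): {model.intercept_:.0f}")
print(f"Coefficients (beta1, beta2): {model.coef_.round(1)}")
print(f"R^2 on the test set: {r2:.3f}")
```

The learned `intercept_` and `coef_` correspond to β0 and β1, β2 in the multiple regression equation, and they should land close to the values used to generate the data.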

Logistic Regression: Classifying Categorical Outcomes

Theory Behind Logistic Regression

Logistic Regression is all about classification. It models the probability that an input belongs to a specific category. The logistic function, also known as the sigmoid function, transforms the linear combination of input features into a probability score:

P(y=1) = 1 / (1 + e^(-z))

Where:

P(y=1) is the probability of belonging to class 1.

z is the linear combination of input features and coefficients.
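The formula is easy to try out by hand. The following sketch implements the sigmoid in plain Python and applies it to one input. The intercept, coefficients, and feature values are hypothetical numbers chosen only to show the arithmetic.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real z to a value between 0 and 1."""
    # Branch on the sign of z so math.exp never receives a large positive
    # argument, which would raise OverflowError.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


# Hypothetical coefficients and feature values, chosen only for illustration.
intercept = -1.0
coefficients = [0.8, -0.5]
features = [2.0, 1.0]

# z is the linear combination of input features and coefficients.
z = intercept + sum(c * x for c, x in zip(coefficients, features))
probability = sigmoid(z)

print(f"z = {z:.2f}, P(y=1) = {probability:.3f}")
print("predicted class:", 1 if probability >= 0.5 else 0)
```

A z of 0 maps to exactly 0.5, large positive values approach 1, and large negative values approach 0. Turning the probability into a class label is a matter of picking a threshold, and 0.5 is the usual default.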

Implementing Logistic Regression in Python

Let’s use Python to implement Logistic Regression for a binary classification problem: spam email detection.

import numpy as np

import pandas as pd

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

# Load the dataset (replace 'spam_data.csv' with your dataset)

data = pd.read_csv('spam_data.csv')

# Define features (X) and target variable (y); every feature column must already be numeric

X = data.drop('is_spam', axis=1)

y = data['is_spam']

# Split data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the logistic regression model

model = LogisticRegression()

model.fit(X_train, y_train)

# Make predictions

y_pred = model.predict(X_test)

# Evaluate the model

accuracy = accuracy_score(y_test, y_pred)

confusion = confusion_matrix(y_test, y_pred)

report = classification_report(y_test, y_pred)

print(f'Accuracy: {accuracy}')

print('Confusion Matrix:\n', confusion)

print('Classification Report:\n', report)

This code utilizes scikit-learn to build a Logistic Regression model, split the data into training and testing sets, make predictions, and evaluate the model’s performance.
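To connect the code back to the sigmoid formula, the sketch below trains the same kind of model on synthetic data and then recomputes its probabilities by hand. The data comes from scikit-learn’s `make_classification` and merely stands in for a spam dataset. The check assumes a binary problem and the default `LogisticRegression` settings.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic binary data standing in for a spam dataset (not real emails).
X, y = make_classification(
    n_samples=500, n_features=5, n_informative=3, n_redundant=0, random_state=42
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

model = LogisticRegression()
model.fit(X_train, y_train)

# Column 1 of predict_proba holds P(y=1) for each row.
proba = model.predict_proba(X_test)[:, 1]

# Recompute the same probabilities by hand: z = intercept + X . coefficients,
# then apply the sigmoid.
z = model.intercept_[0] + X_test @ model.coef_[0]
manual_proba = 1.0 / (1.0 + np.exp(-z))

# predict() corresponds to thresholding P(y=1) at 0.5.
manual_pred = (proba >= 0.5).astype(int)

print("First five probabilities:", proba[:5].round(3))
print("Matches manual sigmoid:", np.allclose(proba, manual_proba))
print("Matches predict():", np.array_equal(manual_pred, model.predict(X_test)))
```

Working with `predict_proba` instead of `predict` is useful when the 0.5 cutoff is not appropriate, for example when wrongly flagging a legitimate email as spam is more costly than missing a spam message.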

Conclusion

Congratulations! You’ve embarked on a journey to demystify the world of regression in Python. We’ve explored Linear Regression for predicting continuous values and Logistic Regression for classifying categorical outcomes.

Linear Regression finds its place when you need to predict real numbers, such as house prices. On the other hand, Logistic Regression shines when you venture into the realm of classification, helping you tackle challenges like spam detection.

As you continue your Python and machine learning journey, remember that these regression techniques are just the beginning. Dive into more advanced topics like feature engineering, model tuning, and exploring other machine learning algorithms to become a true Python pro.

The world of Python is vast and exciting, and you’re well on your way to mastering it. Happy coding, and may your Python endeavors be fruitful!

